Nvidia G-Sync Review - Introduction

Manufacturer: Nvidia

UK Price: ~£100 for the board, £440 for the monitor

US Price: ~$100 for the board, $400 for the monitor

At their most recent major graphics card launch events, AMD and Nvidia each announced a proprietary new graphics technology that they hope will be the next big thing. AMD went for performance enhancement in the shape of a new API called Mantle, which can potentially boost performance on certain AMD graphics cards. The tactic plays on the fact that AMD’s chips are inside the PS4, Wii U and Xbox One, so developers should be more willing to dig into the inner workings of AMD’s hardware to extract performance. We’ve yet to see the fruits of this.

Nvidia, on the other hand, in a move that ties in with many of its more recent announcements such as FaceWorks, WaveWorks and GeForce Experience, went for an experience-enhancing technology: one that aims to make gaming more enjoyable, regardless of how fast your card is. That technology is called G-Sync.

G-Sync is a hardware solution that allows Nvidia graphics cards to control the refresh rate of your monitor. The benefit is the elimination of tearing and stutter, for a much smoother, more visually satisfying experience.

The principle of G-Sync is really quite simple. Normally monitors have a fixed refresh rate that’s generally 60Hz but can be as high as 120Hz or 144Hz for some gaming/3D monitors. In contrast, GPUs deliver newly rendered frames to the monitor whenever the GPU is done processing them. This creates two problems: tearing and stutter.

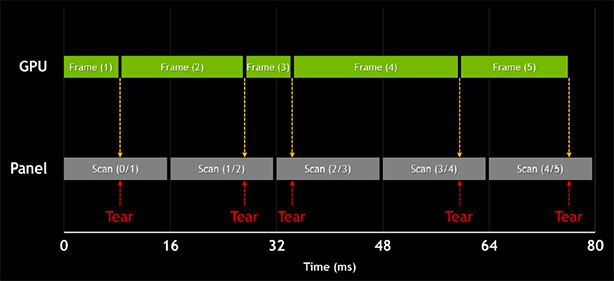

Tearing occurs when the GPU delivers a new frame to the monitor before the monitor has finished drawing the last one, creating a step change in the image shown on screen. The effect is most obvious when panning left or right, as the vertical edges of objects such as trees, lamp posts, buildings and NPCs will be broken up. The faster the framerate, the more steps you’ll see.
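To make the tearing mechanism concrete, here is a minimal sketch that estimates where on screen each tear would land. It is an illustration under simplifying assumptions, not a model of any particular monitor: the panel is assumed to draw its rows top to bottom over the whole refresh interval (blanking time is ignored), and the function name `tear_positions` and its parameters are made up for this example.

```python
def tear_positions(frame_done_ms, refresh_hz=60, rows=1080):
    """Return (refresh_index, row) for each frame that arrives mid-refresh.

    frame_done_ms: times (whole milliseconds) at which the GPU finishes frames.
    Assumes the panel scans out rows evenly across the full refresh interval.
    """
    tears = []
    for done in frame_done_ms:
        # done * refresh_hz is the elapsed time in thousandths of a refresh.
        refresh_index, phase = divmod(done * refresh_hz, 1000)
        if phase == 0:
            continue  # frame arrived exactly at the start of a refresh: no tear
        tears.append((refresh_index, phase * rows // 1000))
    return tears


# Three frames finishing 10, 22 and 30 ms after start on a 60Hz, 1080-row panel.
print(tear_positions([10, 22, 30]))  # [(0, 648), (1, 345), (1, 864)]
```

In this example the second refresh contains two tears, which matches the observation that a faster framerate puts more steps into each displayed image.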

This phenomenon has been known about for a long time, though, and has long had a solution in the form of V-Sync. This simply makes the graphics card wait for the monitor’s next refresh before sending a new frame, so the monitor only ever displays complete frames, instantly eliminating tearing. However, V-Sync has its own issues.

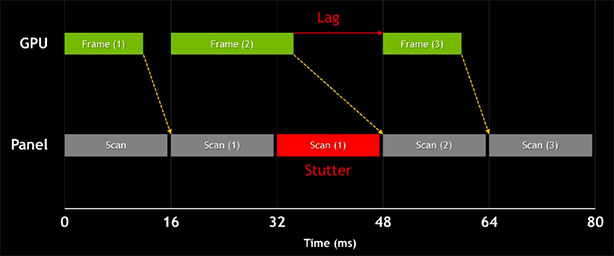

While the monitor will now only display whole frames, there’s no guarantee that the GPU can deliver a new frame in time for each refresh of the monitor’s panel. At lower framerates in particular, say 30-40fps, the card will regularly under-deliver frames, forcing the monitor to repeat the frame it was already showing. This wouldn’t be so bad if it consistently doubled every frame (it would just look like 30fps), but because the framerate is inconsistent and the refresh rate is regular, it may be that within a second the monitor shows one frame three times, the next only once and the one after that twice.
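The uneven repetition described above can be sketched with a few lines of arithmetic. This is a simplified model rather than a description of any real driver: it assumes the GPU renders continuously without stalling, that every frame takes longer than one refresh interval, and that each finished frame first appears at the next refresh. The function name `refreshes_per_frame` is made up for this example.

```python
def refreshes_per_frame(frame_times_ms, refresh_hz=60):
    """Count how many refreshes each frame stays on screen with V-Sync on.

    frame_times_ms: render time of each frame in whole milliseconds.
    The last frame is omitted because nothing follows it to replace it.
    """
    first_refresh = []
    done = 0
    for frame_time in frame_times_ms:
        done += frame_time
        # Ceiling division: index of the first refresh at or after 'done'.
        first_refresh.append(-(-done * refresh_hz // 1000))
    return [b - a for a, b in zip(first_refresh, first_refresh[1:])]


# Perfectly steady 33 ms frames: every frame is shown exactly twice.
print(refreshes_per_frame([33, 33, 33, 33, 33]))  # [2, 2, 2, 2]

# Uneven frame times averaging about 36fps: the repeat count jumps around.
print(refreshes_per_frame([20, 45, 18, 35, 22]))  # [2, 1, 3, 1]
```

The second run averages a higher framerate than the first, yet it is the one that would look worse, because successive frames stay on screen for one, two or three refreshes with no regular pattern.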

This inconsistency (and that really is the key, as the eye/brain is very good at filling in the blanks if things are delivered consistently) creates a stuttering effect that, while definitely less obvious than tearing, is still a far from perfect experience. However, if your graphics card can consistently deliver a framerate higher than the refresh rate of your monitor then stuttering is all but eliminated with V-Sync enabled.

All of which brings us to G-Sync. This new technology aims to eliminate both these issues by making the display not just wait for a new frame before changing the old one, but wait for the GPU to tell it when to refresh at all. So whereas with V-Sync the display will constantly be waiting for or lagging behind the framerate, with G-Sync they should be perfectly in sync, creating a much smoother experience.
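The difference between the two approaches can be sketched by comparing how long each frame stays on screen with how long the following frame took to render. This is a rough model under stated assumptions, not Nvidia's implementation: the GPU is assumed to render continuously, every frame takes longer than one refresh interval, and the variable-refresh panel is assumed to accept whatever interval the GPU asks for (a real panel has minimum and maximum refresh rates). All function names here are hypothetical.

```python
def vsync_intervals_ms(frame_times_ms, refresh_hz=60):
    """On-screen time of each frame when it can only change on a fixed refresh."""
    first_refresh, done = [], 0
    for frame_time in frame_times_ms:
        done += frame_time
        # Ceiling division: index of the first refresh at or after 'done'.
        first_refresh.append(-(-done * refresh_hz // 1000))
    return [(b - a) * 1000 / refresh_hz
            for a, b in zip(first_refresh, first_refresh[1:])]


def variable_refresh_intervals_ms(frame_times_ms):
    """On-screen time of each frame when the display refreshes on demand.

    Each frame is shown as soon as it is done and stays up until the next
    frame is done, so its on-screen time is the next frame's render time.
    """
    return [float(frame_time) for frame_time in frame_times_ms[1:]]


def mismatch_ms(intervals_ms, frame_times_ms):
    """Difference between on-screen time and the next frame's render time."""
    return [shown - rendered
            for shown, rendered in zip(intervals_ms, frame_times_ms[1:])]


frame_times = [20, 45, 18, 35, 22]  # milliseconds, invented for illustration
fixed = vsync_intervals_ms(frame_times)
variable = variable_refresh_intervals_ms(frame_times)

print([round(x, 1) for x in mismatch_ms(fixed, frame_times)])
print(mismatch_ms(variable, frame_times))
```

Under these assumptions the fixed-refresh mismatch swings both positive and negative from frame to frame, while the variable-refresh mismatch is zero throughout, which is the "perfectly in sync" behaviour described above.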
